May 2023 – March 2024

Role: UX Designer/Researcher, Unity3D Developer

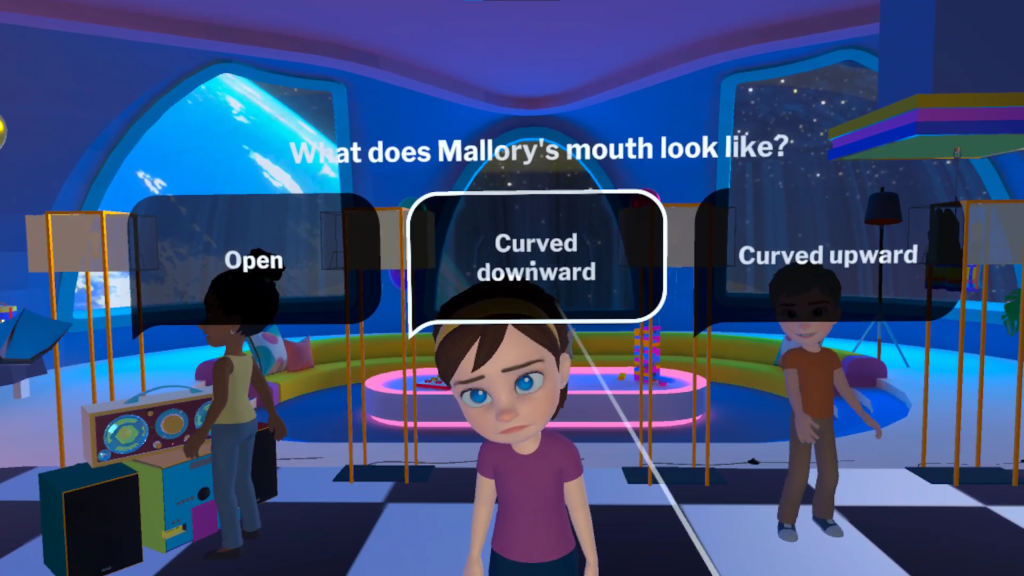

The Clark-Hill Institute for Positive Youth Development at Virginia Commonwealth University collaborated with Brain Spice Labs to revamp an existing web-based program and create an entertaining and informative virtual reality experience for children. Totaling over one hour of content, the application used motion capture, interactive games, an overarching storyline, and engaging lessons.

Background

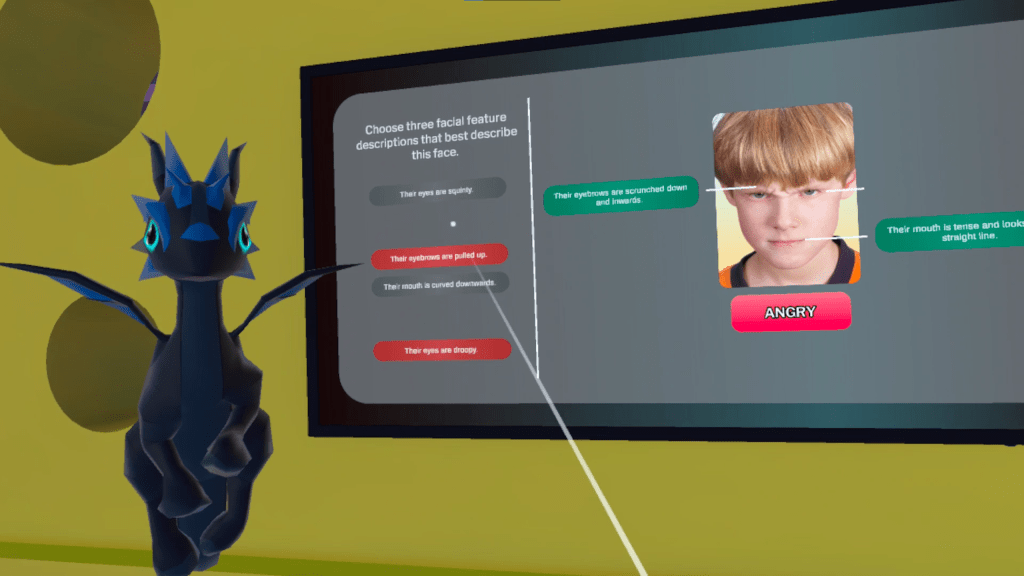

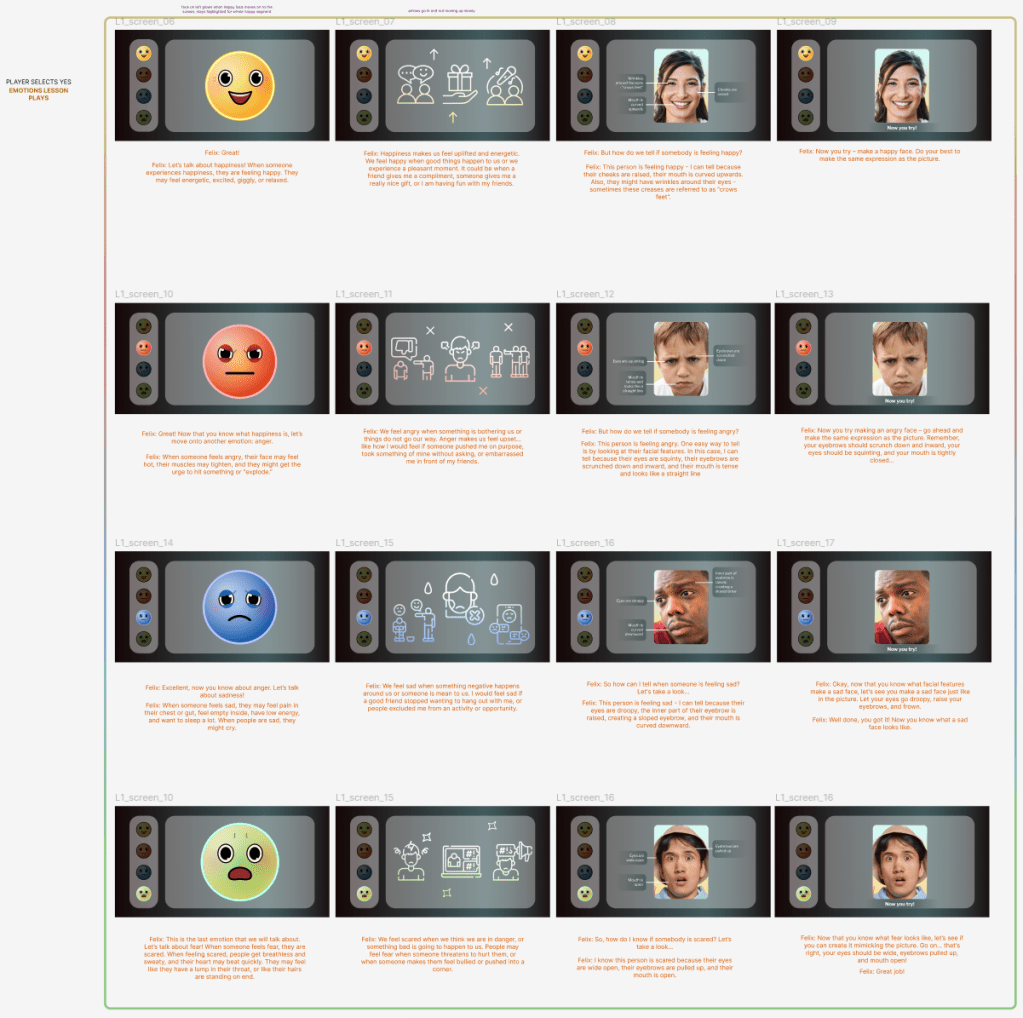

The existing training program, Cardiff Emotion Recognition Training (CERT), was developed years ago and had plenty of information but very few engaging activities. The goal of the new trainer was to help children with specific mental health needs (e.g. conduct disorder) recognize facial expressions and understand what these emotions mean.

Design Goals

- Visual – Style the design after current TV shows aimed at ages 8-17

- Device – Develop a standalone Meta Quest Pro application

- Personal Guide – Introduce a non-human guide character

- Data Collection – Collect data from baseline assessments and post assessments

- Educational Content – Create user-dependent lessons featuring content provided by VCU

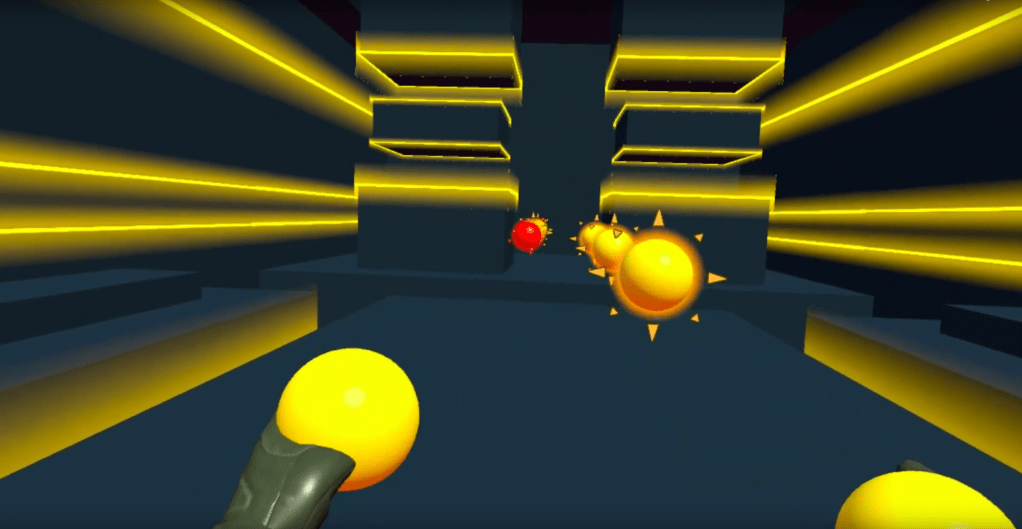

- Mini Games – Design a custom-made rhythm game to give the user a break, along with repeatable training games

UX Process

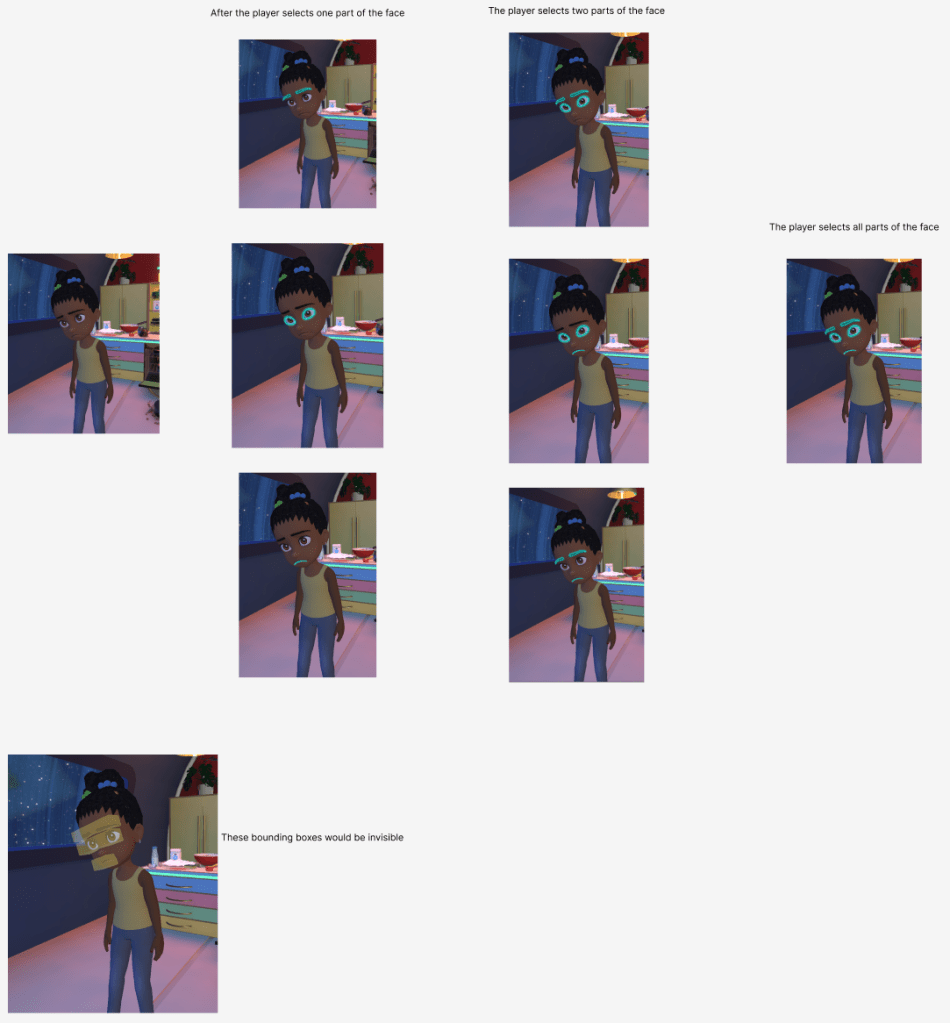

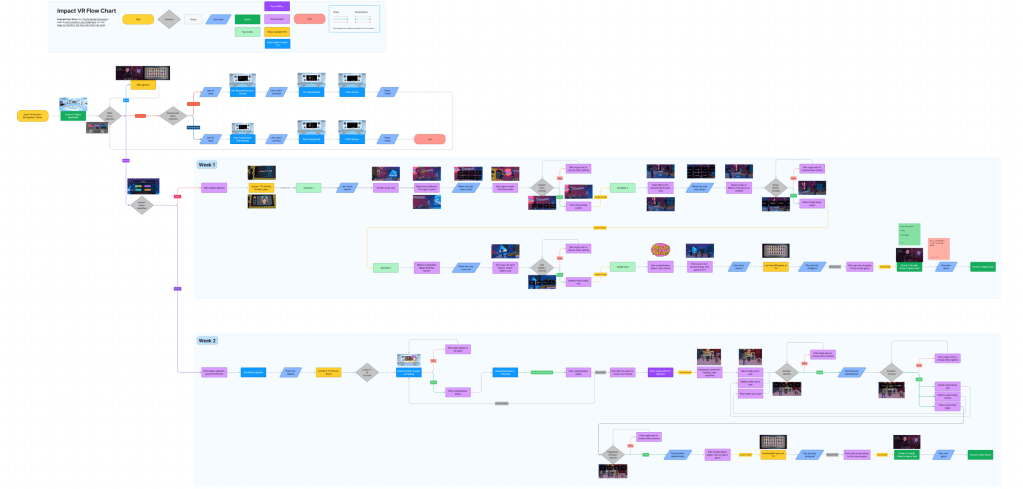

The application was played across four separate sessions, each structured in a similar way. Impact VR took a player-centric approach to better teach children to recognize facial expressions and emotions. The content provided by VCU needed to be organized and iterated into manageable parts for the experience and user interface. There also needed to be visual consistency between each part, from the assessments to the ending mini game.

I collaborated with the project manager and 2D artist to create the interaction flow and UI design in Figma. Following client review and user testing, the application flow went through multiple iterations to give the player a more seamless experience.

Development

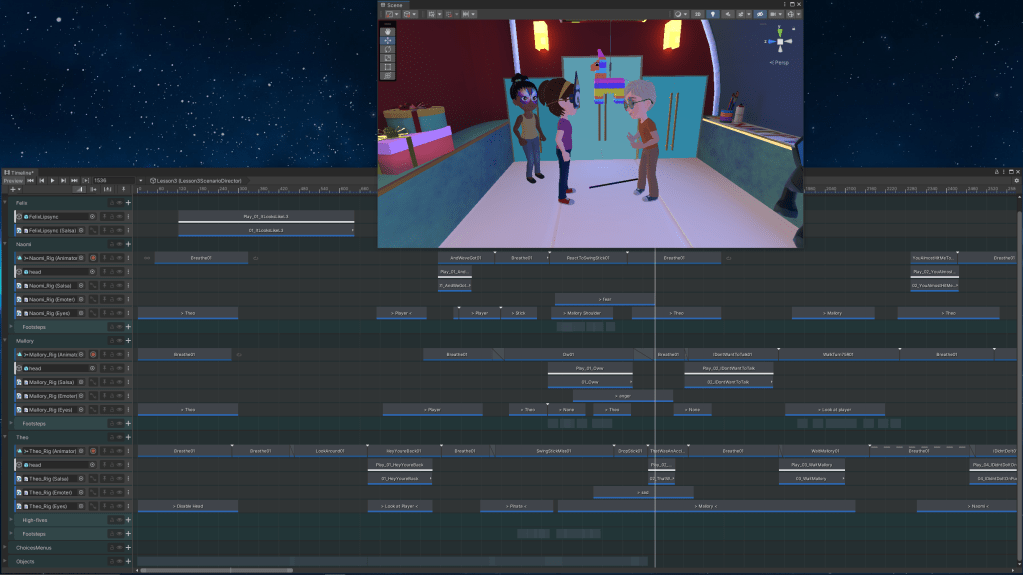

As lead developer for this project, I was in charge of implementing the entire project in Unity3D. I added all user interfaces, educational material, mini games, and voice-over clips. The most challenging part of the project was collaborating with the motion capture artist to coordinate how characters animated in the space according to the actions and character lines written in the script. Ultimately, I implemented all of the animation clips and voice lines using Timeline, Unity’s built-in sequencing tool.

Data collection was handled by outputting user answers from the randomly shuffled pre-assessments and post-assessments to an Excel spreadsheet saved internally on the standalone headset.
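As a rough illustration of this shuffle-and-log approach, here is a minimal Python sketch. It is not the project's Unity code. It writes CSV rather than an Excel file, and every name in it (`shuffle_questions`, `write_answers`, the column headers, the sample question IDs and answers) is hypothetical.

```python
import csv
import io
import random


def shuffle_questions(question_ids, seed=None):
    """Return a shuffled copy of the question IDs (the input list is left untouched)."""
    rng = random.Random(seed)  # a seed makes the order reproducible for testing
    order = list(question_ids)
    rng.shuffle(order)
    return order


def write_answers(stream, participant_id, phase, presented_order, answers):
    """Write one row per question, in the order it was presented to the user."""
    writer = csv.writer(stream)
    # Hypothetical column layout; the real spreadsheet format is not described here.
    writer.writerow(["participant_id", "phase", "position", "question_id", "answer"])
    for position, question_id in enumerate(presented_order, start=1):
        writer.writerow(
            [participant_id, phase, position, question_id, answers[question_id]]
        )


# Illustrative data only.
questions = ["q1", "q2", "q3", "q4"]
order = shuffle_questions(questions, seed=7)
answers = {"q1": "happy", "q2": "angry", "q3": "sad", "q4": "afraid"}

# An in-memory buffer stands in for a file saved on the device.
buffer = io.StringIO()
write_answers(buffer, "P001", "pre", order, answers)
```

Recording the presented position alongside the question ID keeps the shuffled order recoverable, so pre-assessment and post-assessment answers can still be matched question by question.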

Another important part of the project was synchronizing the characters' lip movements with their voice lines, as well as controlling their eyes and gaze. This was done with SALSA, a lip-sync suite, and consisted of coordinating triggers with the Timeline tool to control where and when a character would change their gaze, as well as which emotion the character would display on their face.